Results & Analysis

Performance evaluation across multiple chest pathologies

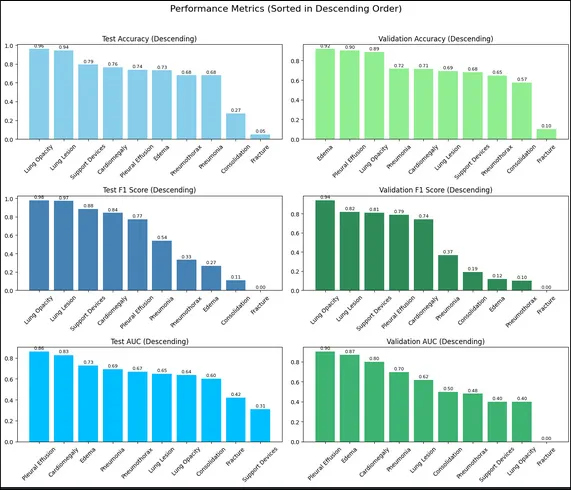

Our ResNet-18 classifier's performance varied widely across pathologies, with the strongest results on the more common abnormalities and the weakest on cardiomegaly. The results illustrate both the promise and the remaining challenges of automated chest X-ray interpretation.

| Disease | Accuracy | F1 Score | ROC-AUC |
|---|---|---|---|
| Pleural Effusion | 73.85% | 0.7733 | 0.8618 |
| Atelectasis | 76.47% | 0.8421 | 0.8279 |
| Consolidation | 73.17% | 0.2667 | 0.7270 |
| Pneumonia | 67.92% | 0.5405 | 0.6914 |
| Pneumothorax | 68.00% | 0.3333 | 0.6667 |
| Cardiomegaly | 27.27% | 0.1111 | 0.6000 |
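Assuming each row treats one pathology as a binary present/absent task, the three columns can be computed from ground-truth labels and predicted probabilities as in the minimal, dependency-free sketch below. The function name `binary_metrics` and the 0.5 decision threshold are assumptions for illustration; the threshold used to produce the table is not stated. In practice, scikit-learn's `accuracy_score`, `f1_score`, and `roc_auc_score` compute the same quantities.

```python
from typing import Dict, Sequence


def binary_metrics(labels: Sequence[int], probs: Sequence[float], threshold: float = 0.5) -> Dict[str, float]:
    """Accuracy, F1 and ROC-AUC for one pathology treated as a binary task."""
    if len(labels) != len(probs) or not labels:
        raise ValueError("labels and probs must be non-empty and equally long")
    # Threshold the probabilities (assumed cutoff) to get hard predictions.
    preds = [1 if p >= threshold else 0 for p in probs]

    tp = sum(1 for y, yhat in zip(labels, preds) if y == 1 and yhat == 1)
    fp = sum(1 for y, yhat in zip(labels, preds) if y == 0 and yhat == 1)
    fn = sum(1 for y, yhat in zip(labels, preds) if y == 1 and yhat == 0)
    tn = len(labels) - tp - fp - fn

    accuracy = (tp + tn) / len(labels)
    # F1 = 2TP / (2TP + FP + FN); reported as 0 when the denominator is 0.
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0

    # ROC-AUC equals the probability that a random positive scores higher than
    # a random negative, with ties counted as half. Threshold-independent.
    pos = [p for y, p in zip(labels, probs) if y == 1]
    neg = [p for y, p in zip(labels, probs) if y == 0]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs at least one positive and one negative")
    wins = sum(1.0 if pp > pn else 0.5 if pp == pn else 0.0 for pp in pos for pn in neg)
    auc = wins / (len(pos) * len(neg))
    return {"accuracy": accuracy, "f1": f1, "roc_auc": auc}


m = binary_metrics([1, 0, 1, 0, 1, 0], [0.9, 0.4, 0.35, 0.2, 0.7, 0.6])
print({k: round(v, 3) for k, v in m.items()})  # {'accuracy': 0.667, 'f1': 0.667, 'roc_auc': 0.778}
```

Because ROC-AUC ignores the threshold while accuracy and F1 depend on it, a row can show a moderate ROC-AUC alongside a poor accuracy or F1.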

Key Findings

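Strong Performance on Common Pathologies: The model performed best on pleural effusion (ROC-AUC: 0.862, the highest in the table) and atelectasis (F1: 0.842, also the highest). These conditions present comparatively clear visual markers that CNNs can learn to identify: fluid in the pleural space, which blunts the costophrenic angles, for effusions, and collapsed lung regions for atelectasis. Because F1 is the harmonic mean of precision and recall, a high F1 for atelectasis means neither precision nor recall is poor, which matters for clinical deployment, where both missed findings and false alarms carry costs.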
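The precision-recall point can be made concrete with a short worked sketch (function names are illustrative). It shows that the harmonic mean is pulled toward the weaker component, and it derives the lower bound on precision and recall implied by the atelectasis F1 of 0.8421 alone. The table does not report precision or recall separately, so the bound is all that F1 guarantees.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """F1 score: the harmonic mean of precision and recall (0.0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def min_pr_lower_bound(f1: float) -> float:
    """Smallest value either precision or recall can take for a given F1.

    F1 = 2pr / (p + r) increases in each argument, so with the other term at
    its maximum of 1.0 we get F1 <= 2m / (1 + m), i.e. m >= F1 / (2 - F1).
    """
    return f1 / (2 - f1)


# Equal arithmetic means (0.6), very different F1: the weaker component dominates.
print(round(f1_from_pr(0.6, 0.6), 4))  # 0.6
print(round(f1_from_pr(1.0, 0.2), 4))  # 0.3333

# Atelectasis F1 from the table: neither precision nor recall can be below about 0.727.
print(round(min_pr_lower_bound(0.8421), 3))  # 0.727
```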

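Challenges with Rare Conditions: Cardiomegaly detection proved particularly challenging (accuracy: 27.27%, F1: 0.111, ROC-AUC: 0.600), likely due to severe class imbalance in the training data. For a binary task, an accuracy below 50% is worse than always predicting the majority class, and a ROC-AUC of 0.600 indicates ranking only modestly better than chance, so both the learned features and the decision threshold appear to need work for this class. The pathology also requires a subtle assessment of cardiac silhouette size relative to the thoracic cavity (the cardiothoracic ratio), a task that would likely benefit from more training examples and potentially from ensemble approaches.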
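The class-imbalance explanation points to a standard mitigation, shown here as an assumption rather than as what this project did: up-weighting positive examples in the binary cross-entropy loss. PyTorch exposes this as the `pos_weight` argument of `torch.nn.BCEWithLogitsLoss`; the dependency-free sketch below writes out the same per-example formula so the effect is visible. The negative-to-positive ratio heuristic, the helper names, and the 10/90 counts are hypothetical.

```python
import math
from typing import Sequence


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def pos_weight_from_counts(n_pos: int, n_neg: int) -> float:
    """Common heuristic (assumed): scale positives by the negative-to-positive ratio."""
    if n_pos <= 0:
        raise ValueError("need at least one positive example")
    return n_neg / n_pos


def weighted_bce_with_logits(logits: Sequence[float], targets: Sequence[int], pos_weight: float) -> float:
    """Mean of -[pos_weight * y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))].

    Uses -log(sigmoid(x)) = softplus(-x) and -log(1 - sigmoid(x)) = softplus(x)
    to avoid overflow for large |x|.
    """
    if len(logits) != len(targets) or not logits:
        raise ValueError("logits and targets must be non-empty and equally long")
    total = 0.0
    for x, y in zip(logits, targets):
        total += pos_weight * y * softplus(-x) + (1 - y) * softplus(x)
    return total / len(logits)


# Hypothetical split: 10 cardiomegaly-positive vs 90 negative training images.
w = pos_weight_from_counts(n_pos=10, n_neg=90)
# A missed positive (logit -2) now costs 9x a mirror-image false positive (logit +2).
print(weighted_bce_with_logits([-2.0], [1], w) / weighted_bce_with_logits([2.0], [0], w))  # ≈ 9.0
```

Reweighting shifts the model's scores toward the rare class, so the decision threshold generally has to be re-selected on validation data afterwards.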

Report Generation Quality: LLaMA-3.2-11B-Vision generated coherent diagnostic narratives that used medical terminology appropriately. The model learned to structure reports into findings, impression, and recommendations sections. However, it occasionally hallucinated findings not present in the images, which underscores the need for human oversight in clinical settings.
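Because the reports follow a fixed findings / impression / recommendations structure, a cheap automated check can flag generations that drop a section or leave it empty before they reach a reviewer. The sketch below assumes each section starts on its own line with a `Header:` label; the header names, regex, and function names are hypothetical. It only checks structure: it cannot detect hallucinated findings, which still require a human reader to compare the report against the image.

```python
import re
from typing import Dict, List

# Hypothetical template: the exact headers used in the project's prompts are not specified.
REQUIRED_SECTIONS = ("findings", "impression", "recommendations")
HEADER_RE = re.compile(
    r"^[ \t]*(findings|impressions?|recommendations?)[ \t]*:",
    re.IGNORECASE | re.MULTILINE,
)


def _canonical(header: str) -> str:
    """Map header variants (e.g. 'Impressions', 'Recommendation') to one key."""
    h = header.lower()
    if h.startswith("impression"):
        return "impression"
    if h.startswith("recommendation"):
        return "recommendations"
    return "findings"


def split_sections(report: str) -> Dict[str, str]:
    """Split a generated report into {section: body} using 'Header:' lines."""
    matches = list(HEADER_RE.finditer(report))
    sections: Dict[str, str] = {}
    for i, m in enumerate(matches):
        # Each body runs until the next recognized header (or end of text).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(report)
        sections[_canonical(m.group(1))] = report[m.end():end].strip()
    return sections


def missing_sections(report: str) -> List[str]:
    """Required sections that are absent or empty."""
    sections = split_sections(report)
    return [name for name in REQUIRED_SECTIONS if not sections.get(name)]


report = (
    "Findings: Blunting of the left costophrenic angle.\n"
    "Impression: Small left pleural effusion.\n"
)
print(missing_sections(report))  # ['recommendations']
```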

Clinical Implications

Our results suggest that AI-assisted chest X-ray interpretation may be approaching clinical viability for common pathologies. For those conditions, the system could serve as an initial screening tool, prioritizing cases for radiologist review and providing preliminary reports to speed up reporting workflows.
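One way the screening role could work is a score-ordered worklist. In this minimal sketch (the function name, study IDs, and scores are hypothetical), each study is ranked by its highest per-pathology probability so that likely-abnormal studies are read first, while every study remains on the list. Per-pathology probabilities are not necessarily calibrated against one another, so a real deployment would need to validate such a ranking; the sketch only illustrates the ordering logic.

```python
from typing import Dict, List, Tuple


def prioritize(studies: Dict[str, Dict[str, float]]) -> List[Tuple[str, str, float]]:
    """Order studies by their single most suspicious finding, highest first.

    Returns (study_id, top_pathology, top_probability) tuples. No study is
    dropped; the model only changes the reading order.
    """
    worklist = []
    for study_id, probs in studies.items():
        top_label, top_prob = max(probs.items(), key=lambda kv: kv[1])
        worklist.append((study_id, top_label, top_prob))
    worklist.sort(key=lambda item: item[2], reverse=True)
    return worklist


# Hypothetical model outputs for three studies.
queue = prioritize({
    "study-001": {"Pleural Effusion": 0.12, "Atelectasis": 0.30},
    "study-002": {"Pleural Effusion": 0.91, "Atelectasis": 0.20},
    "study-003": {"Pleural Effusion": 0.45, "Atelectasis": 0.62},
})
for study_id, label, prob in queue:
    print(study_id, label, prob)
```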

The performance gap between common and rare conditions underscores a critical challenge in medical AI: dataset representation. Future work should focus on targeted data collection for underrepresented pathologies and synthetic data generation techniques to balance training distributions.

Future Directions

Several avenues could improve model performance:

- Ensemble Methods: Combining multiple CNN architectures to capture diverse visual features

- Focal Loss Implementation: Better handling of class imbalance through modified loss functions

- Larger Vision Models: Exploring models like SAM (Segment Anything Model) for better feature extraction

- Multi-view Integration: Incorporating lateral views alongside posteroanterior (PA) images for more comprehensive analysis

- Federated Learning: Training across multiple hospital systems while preserving patient privacy
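Two of these directions, focal loss and ensembling, are compact enough to sketch. The block below is a dependency-free illustration, not this project's implementation. It uses binary focal loss as defined by Lin et al. (2017), with that paper's common defaults of alpha = 0.25 and gamma = 2, and the simplest ensembling rule, which averages per-model sigmoid probabilities. torchvision ships a tensor version of the loss as `torchvision.ops.sigmoid_focal_loss`; the function names here are illustrative.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def binary_focal_loss(
    logits: Sequence[float],
    targets: Sequence[int],
    gamma: float = 2.0,
    alpha: float = 0.25,
) -> float:
    """Mean focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t).

    p_t is the predicted probability of the true class. gamma > 0 shrinks the
    loss on easy, confidently correct examples; gamma = 0 recovers
    alpha-weighted binary cross-entropy.
    """
    if len(logits) != len(targets) or not logits:
        raise ValueError("logits and targets must be non-empty and equally long")
    total = 0.0
    for x, y in zip(logits, targets):
        p = sigmoid(x)
        p_t = p if y == 1 else 1.0 - p
        alpha_t = alpha if y == 1 else 1.0 - alpha
        # Clamp to avoid log(0) when the sigmoid saturates.
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, 1e-12))
    return total / len(logits)


def ensemble_probs(per_model_logits: Sequence[Sequence[float]]) -> List[float]:
    """Average sigmoid probabilities across models (one logit list per model)."""
    n_models = len(per_model_logits)
    return [sum(sigmoid(x) for x in case) / n_models for case in zip(*per_model_logits)]


# An easy positive (logit 4.0) contributes far less loss with gamma=2 than with gamma=0.
print(binary_focal_loss([4.0], [1], gamma=2.0), binary_focal_loss([4.0], [1], gamma=0.0))
# Two hypothetical models scoring the same three images.
print(ensemble_probs([[2.0, -1.0, 0.0], [1.0, -3.0, 0.0]]))
```

Probability averaging is only one combination rule; weighted averaging or stacking are alternatives, and any choice would need per-pathology validation.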